The One Big Table

The One Big Table (OBT), often implemented as a Wide Table, is a data modeling strategy focused on maximizing performance for analytical queries.

The core idea is extreme denormalization: all necessary data - including measures, facts, and descriptive attributes - are pre-joined into a single table. This approach makes a fundamental trade-off: it accepts significant data redundancy (which uses more storage) to eliminate the need for complex, resource-intensive JOIN and Shuffle operations at query time. The heavy computational work of joining is shifted from the user’s query into the data preparation phase (ETL/ELT).
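As a minimal sketch of that shift, the example below uses Python's built-in sqlite3 module to pre-join a tiny star schema into one wide table. All table and column names (fact_sales, dim_customer, dim_product, obt_sales) are hypothetical, and SQLite only stands in for whichever warehouse or lake engine is actually in use.

```python
import sqlite3


def build_obt(conn: sqlite3.Connection) -> None:
    """Create a small star schema and pre-join it into one wide table."""
    conn.executescript("""
        CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, customer_name TEXT, country TEXT);
        CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
        CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, customer_id INTEGER, product_id INTEGER, amount REAL);

        INSERT INTO dim_customer VALUES (1, 'Ada', 'UK'), (2, 'Linus', 'FI');
        INSERT INTO dim_product VALUES (10, 'Keyboard', 'Hardware'), (11, 'Editor', 'Software');
        INSERT INTO fact_sales VALUES (100, 1, 10, 49.0), (101, 1, 11, 15.0), (102, 2, 10, 49.0);

        -- The joins run once here, in the preparation step.
        CREATE TABLE obt_sales AS
        SELECT s.sale_id, s.amount,
               c.customer_name, c.country,
               p.product_name, p.category
        FROM fact_sales AS s
        JOIN dim_customer AS c ON c.customer_id = s.customer_id
        JOIN dim_product AS p ON p.product_id = s.product_id;
    """)


conn = sqlite3.connect(":memory:")
build_obt(conn)

# The analytical query reads a single table: no JOIN at query time.
revenue_by_country = dict(
    conn.execute("SELECT country, SUM(amount) FROM obt_sales GROUP BY country").fetchall()
)
```

Note the redundancy this creates: the customer's country is now stored on every sale row instead of once in the dimension table.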

OBT is rarely the organization’s single source of truth. It typically serves as a derived, optimized consumption layer (a Data Mart) built on top of a more structured governance model, like a Dimensional Model.

Columnar storage as the catalyst

The success of OBT is tied to formats like Apache Parquet and ORC. These columnar architectures minimize the storage penalty of data redundancy through two mechanisms:

High Compression: Columnar storage groups similar data types, allowing compression algorithms to achieve dramatically better ratios than in traditional row-based systems.

Efficient Reading: Columnar query engines can read only the specific columns needed for a query, drastically reducing disk I/O and increasing speed, even if the table contains hundreds of unneeded columns.
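Both mechanisms can be illustrated with a toy in-memory model. This is not how Parquet or ORC are implemented; it is a simplified sketch with hypothetical data, in which run-length encoding stands in for the richer encodings those formats apply, and a dictionary of lists stands in for column chunks on disk.

```python
from itertools import groupby

# Hypothetical denormalized rows: the country attribute repeats on every sale.
rows = [
    {"sale_id": 1, "country": "UK", "amount": 49.0},
    {"sale_id": 2, "country": "UK", "amount": 15.0},
    {"sale_id": 3, "country": "UK", "amount": 49.0},
    {"sale_id": 4, "country": "FI", "amount": 49.0},
    {"sale_id": 5, "country": "FI", "amount": 15.0},
]


def to_columns(records):
    """Pivot row-oriented records into one list of values per column."""
    return {name: [record[name] for record in records] for name in records[0]}


def run_length_encode(values):
    """Collapse runs of equal adjacent values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


columns = to_columns(rows)

# High compression: five redundant strings shrink to two (value, count) pairs.
encoded_country = run_length_encode(columns["country"])

# Efficient reading: the aggregate touches only the 'amount' column.
total_amount = sum(columns["amount"])
```

Because each column holds values of one type, and denormalized attributes tend to repeat in long runs, the redundant data is exactly the part that encodes most compactly.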

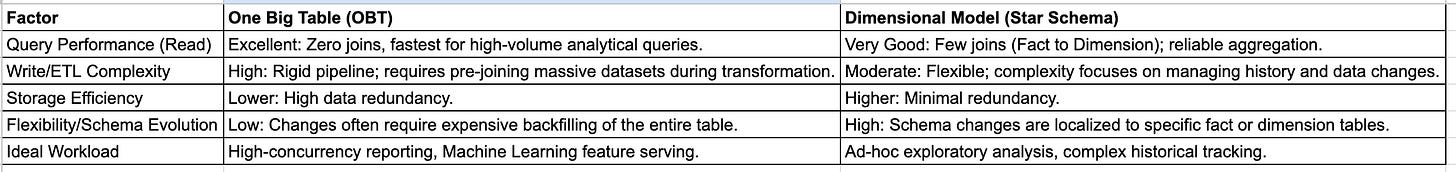

OBT vs. Star Schema

The main advantage of OBT is that it eliminates distributed join operations at query time, which often accelerates read operations by 10% to 45% compared to Star Schema models for high-volume analytics. This performance gain is critical in heavily read-dominant environments where users demand immediate results.

However, the Dimensional Model remains crucial for data governance. It is easy to build a high-speed OBT from a stable dimensional model, but it is structurally challenging to accurately derive a consistent dimensional model from a rigid OBT.

Best Practices for OBT Schema Design

Physical Implementation and Data Freshness

In data lake environments, OBTs should be materialized using columnar formats like Apache Parquet for optimal read performance. Because the pipeline to create the OBT is resource-intensive, data engineers must ensure the process is highly robust to guarantee that the final, fast-querying OBT contains accurate, up-to-the-minute data.

Partitioning and Indexing Strategies (Wide-Column Stores)

In specialized wide-column NoSQL systems (like Bigtable), OBT performance depends chiefly on the row key, the single index used for retrieval.

Best practices for row key design include:

Query-Driven Design: The row key must align with how the data will be read. The fastest queries are those that retrieve a contiguous range of rows.

Composite Keys: Use delimited composite keys (e.g., user_id#timestamp) to group related data physically together, enabling efficient range scans.

Hotspot Avoidance: Keys must be designed to distribute reads and writes evenly across the table space to prevent high-latency “hotspots” in the cluster.

Numerical Padding: Pad numerical values (especially timestamps) with leading zeroes to ensure correct sorting and accurate range retrieval, as these systems often sort lexicographically.
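The practices above can be sketched in a few lines of Python. The helper names, the 13-digit width (which assumes epoch-millisecond timestamps), and the bucket-prefix scheme are all assumptions made for illustration; a bucket prefix is only one possible way to spread keys, and it trades away scans that span many users.

```python
import zlib

DELIMITER = "#"
TIMESTAMP_WIDTH = 13  # assumption: epoch milliseconds, zero-padded to 13 digits


def make_row_key(user_id: str, timestamp_ms: int) -> str:
    """Composite key user_id#timestamp with a zero-padded timestamp."""
    return f"{user_id}{DELIMITER}{timestamp_ms:0{TIMESTAMP_WIDTH}d}"


def make_salted_row_key(user_id: str, timestamp_ms: int, buckets: int = 8) -> str:
    """Prefix the key with a bucket derived from user_id to spread key ranges."""
    bucket = zlib.crc32(user_id.encode("utf-8")) % buckets
    return f"{bucket:02d}{DELIMITER}{make_row_key(user_id, timestamp_ms)}"


def scan_prefix(sorted_keys, prefix):
    """Stand-in for a range scan: keys sharing a prefix are contiguous when sorted."""
    return [key for key in sorted_keys if key.startswith(prefix)]


# Without padding, lexicographic order puts timestamp 10 before timestamp 9.
unpadded = sorted(["u1#9", "u1#10"])
padded = sorted([make_row_key("u1", 10), make_row_key("u1", 9)])

# The delimiter keeps user 'u1' from matching keys that belong to user 'u10'.
keys = sorted(padded + [make_row_key("u10", 5)])
u1_rows = scan_prefix(keys, "u1" + DELIMITER)
```

Because the bucket is computed from user_id alone, all rows for one user still share a prefix, so a per-user range scan remains a single contiguous read.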

Data Mutability and Governance Challenges

Updates and deletes are complex in OBT architectures because the data is typically stored in immutable, append-optimized file formats. To minimize resource consumption, batch update operations: perform a single large “fat mutation” rather than thousands of small, granular updates.
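A rough way to see why batching helps is to model each partition as an immutable file that must be rewritten in full whenever one of its rows changes. The sketch below is a simplified in-memory model with hypothetical data and function names, not the API of any particular storage engine.

```python
import copy
from collections import defaultdict

# Hypothetical table: each partition stands for one immutable file.
files = {
    "2024-01-01": [
        {"id": 1, "status": "new", "amount": 10.0},
        {"id": 2, "status": "new", "amount": 20.0},
    ],
    "2024-01-02": [{"id": 3, "status": "new", "amount": 30.0}],
}

# Each update is (partition, row id, column, new value).
updates = [
    ("2024-01-01", 1, "status", "shipped"),
    ("2024-01-01", 2, "status", "shipped"),
    ("2024-01-01", 1, "amount", 12.0),
    ("2024-01-02", 3, "status", "cancelled"),
]


def apply_one_by_one(table, changes):
    """Rewrite the whole partition once per update; returns the rewrite count."""
    rewrites = 0
    for partition, row_id, column, value in changes:
        table[partition] = [
            {**row, column: value} if row["id"] == row_id else row
            for row in table[partition]
        ]
        rewrites += 1
    return rewrites


def apply_batched(table, changes):
    """Group updates by partition and rewrite each affected partition once."""
    by_partition = defaultdict(dict)
    for partition, row_id, column, value in changes:
        by_partition[partition].setdefault(row_id, {})[column] = value
    for partition, row_changes in by_partition.items():
        table[partition] = [
            {**row, **row_changes.get(row["id"], {})} for row in table[partition]
        ]
    return len(by_partition)


naive_files = copy.deepcopy(files)
batched_files = copy.deepcopy(files)
naive_rewrites = apply_one_by_one(naive_files, updates)
batched_rewrites = apply_batched(batched_files, updates)
```

Both paths end with identical data, but the batched path rewrites each affected partition once (two rewrites here) instead of once per update (four).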

Use Cases for OBT

OBT should be strategically implemented only when low-latency performance is a critical business driver.

High-Performance Business Intelligence and Interactive Dashboards

For reporting and BI tools where user latency tolerance is near zero, OBT provides fast, sub-second response times under high concurrency. This efficiency is crucial for operational analytics and real-time monitoring applications.

Operational Analytics and Time-Series Data

For massive, continuous data streams like clickstream events or IoT sensor readings, the wide-column OBT pattern is ideal. These systems are designed to manage billions of rows and thousands of columns with extremely high read and write throughput.

OBT as the Machine Learning Feature Store Foundation

The OBT model is the backbone of a Machine Learning (ML) Feature Store. ML models require a feature vector - an inherently wide, denormalized row of data. OBT perfectly matches this structure, providing the fast, consistent access needed for both large-scale training and real-time prediction serving.
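To make the correspondence concrete, the sketch below turns one wide row into a fixed-order feature vector. The column names and the choice to fill missing values with 0.0 are assumptions for illustration only; a real feature store would define its own schema and imputation rules.

```python
# Hypothetical feature columns, in the fixed order the model expects.
FEATURE_COLUMNS = ["age", "orders_30d", "avg_basket"]


def to_feature_vector(row, feature_columns=FEATURE_COLUMNS, default=0.0):
    """Select feature columns from one wide row, in a stable order."""
    vector = []
    for name in feature_columns:
        value = row.get(name)
        # Missing or NULL attributes fall back to the default value.
        vector.append(default if value is None else float(value))
    return vector


# One OBT row already holds everything the model needs; no joins are required.
obt_row = {"user_id": "u1", "country": "UK", "age": 31, "orders_30d": 4, "avg_basket": None}
features = to_feature_vector(obt_row)
```

Using the same column list for training and for serving is what keeps the two paths consistent.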

OBT Implementation Challenges

The Rigidity of the ELT Pipeline

The ELT pipeline required to generate OBT is highly resource-intensive. When business logic or source schema changes, the denormalized OBT often requires expensive backfilling (recomputing the entire historical dataset).

Mitigation: Treat the OBT as a materialized view derived from a stable, core data model. This allows the OBT to be regenerated modularly without disrupting the entire data platform.
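One way to read "regenerated modularly" is to rebuild the OBT one partition at a time from the core model, with each rebuild idempotent. The sketch below assumes a date-partitioned table and uses sqlite3 as a stand-in engine; rebuild_partition and all table and column names are hypothetical.

```python
import sqlite3


def rebuild_partition(conn: sqlite3.Connection, sale_date: str) -> int:
    """Recompute one day of the OBT from the core tables; safe to re-run."""
    with conn:  # delete and insert commit together, or not at all
        conn.execute("DELETE FROM obt_sales WHERE sale_date = ?", (sale_date,))
        conn.execute(
            """
            INSERT INTO obt_sales (sale_id, sale_date, amount, country)
            SELECT s.sale_id, s.sale_date, s.amount, c.country
            FROM fact_sales AS s
            JOIN dim_customer AS c ON c.customer_id = s.customer_id
            WHERE s.sale_date = ?
            """,
            (sale_date,),
        )
    count = conn.execute(
        "SELECT COUNT(*) FROM obt_sales WHERE sale_date = ?", (sale_date,)
    ).fetchone()[0]
    return count


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, country TEXT);
    CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, customer_id INTEGER, amount REAL);
    CREATE TABLE obt_sales (sale_id INTEGER, sale_date TEXT, amount REAL, country TEXT);
    INSERT INTO dim_customer VALUES (1, 'UK'), (2, 'FI');
    INSERT INTO fact_sales VALUES (100, '2024-01-01', 1, 49.0), (101, '2024-01-02', 2, 15.0);
""")
rebuild_partition(conn, "2024-01-01")
rebuild_partition(conn, "2024-01-02")

# A correction in the core model is picked up by re-running the affected partition.
conn.execute("UPDATE dim_customer SET country = 'GB' WHERE customer_id = 1")
conn.commit()
rebuild_partition(conn, "2024-01-01")
```

A change that touches all of history still means rebuilding every partition, but each rebuild is an independent, repeatable unit of work rather than a single all-or-nothing job.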

Analytical Pitfalls and Governance

The most common mistake is the “universal OBT” anti-pattern - a single giant table intended to serve all business needs. This approach merges disparate business processes (like purchasing and marketing) into one structure, leading to confusion, lost context, and analytical misinterpretation.

Mitigation: Avoid the one-size-fits-all approach. Data teams should create multiple, focused OBTs, each tailored to a specific business domain or analytical purpose.